The AI Litepaper explores the potential to deploy Large Language Models (LLMs) on POKT Network to provide robust, scalable AI inference.

POKT Network’s protocol has served more than 750 billion requests since its Mainnet launch in 2020 via an experienced network of ~15k nodes in 22 countries, and can enhance the accessibility and financialization of AI models within the ecosystem.

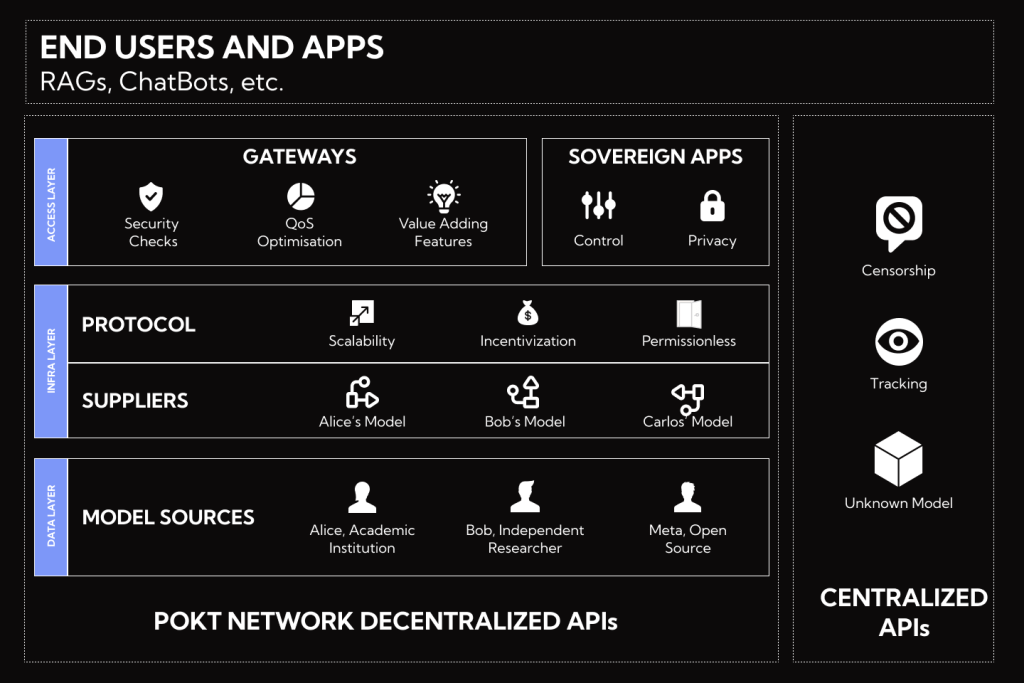

The protocol aligns incentives among model researchers (Sources), hardware operators (Suppliers), API providers (Gateways), and users (Applications) through the Relay Mining algorithm, creating a transparent marketplace where costs and earnings reflect cryptographically verified usage. It is the first mature permissionless network whose quality of service competes with centralized entities set up to provide application-grade inference.

The integration of LLMs underscores the potential to drive adoption, innovation, and financialization of open-source models and positions POKT Network as an emerging player in permissionless LLM inference.

Practical Benefits of AI Inference on POKT Network:

- Scalable AI Inference Services: The decentralized framework allows for the scaling of AI services to meet demand, without downtime, by leveraging POKT Network’s existing infrastructure network.

- Monetization for Model Creators: AI researchers and academics can deploy their models on the network, with the potential to earn revenue based on usage without the need to manage access infrastructure or generate their own demand.

- Transparent Marketplace: The Relay Mining algorithm creates a fully transparent marketplace where costs and earnings are based on cryptographically verified usage, incentivizing Suppliers to maintain high Quality of Service.
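To make the idea of usage-based costs and earnings concrete, here is a minimal Python sketch. It is not the Relay Mining algorithm and not POKT Network's actual settlement logic: it assumes the relay counts have already been verified, and the names `VerifiedUsage` and `settle`, the price per relay, and the Supplier share are all hypothetical values chosen for illustration. The litepaper remains the reference for how usage is actually proven and priced.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VerifiedUsage:
    """Hypothetical record: relays served by one Supplier, assumed already verified."""
    supplier: str
    relays: int


def settle(usages, price_per_relay, supplier_share_bps):
    """Toy settlement: cost and payouts scale linearly with verified relays.

    price_per_relay    -- hypothetical price in the smallest token unit (integer)
    supplier_share_bps -- hypothetical Supplier share in basis points (7000 = 70%)
    Returns (total cost to the Application, payout per Supplier).
    """
    total_cost = sum(u.relays for u in usages) * price_per_relay
    payouts = {}
    for u in usages:
        # Integer arithmetic avoids floating-point rounding in token amounts.
        earned = u.relays * price_per_relay * supplier_share_bps // 10_000
        payouts[u.supplier] = payouts.get(u.supplier, 0) + earned
    return total_cost, payouts


usages = [VerifiedUsage("supplier-a", 1_000), VerifiedUsage("supplier-b", 3_000)]
total_cost, payouts = settle(usages, price_per_relay=5, supplier_share_bps=7_000)
```

In this toy model a Supplier that serves three times as many verified relays earns three times as much, which is the incentive the bullet above describes. How the remaining share would be divided among Sources and Gateways is left out because the text here does not specify it.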

For more detailed insights, access the full AI Litepaper here

POKT Network is the universal RPC infrastructure layer that connects the expanding universe of data sources and services and makes them useful to real people, through an emerging ecosystem of Gateway businesses and sovereign applications. The protocol itself coordinates and incentivises different actors, so that each can do what they do best, enabled by robust and scalable infrastructure that is owned and governed by its users.

The AI Litepaper – Decentralized AI: Permissionless LLM Inference on POKT Network – was authored by Daniel Olshansky, Ramiro Rodríguez Colmeiro and Bowen Li.

Olshansky spent 4 years doing R&D on Magic Leap’s Augmented Reality cloud to enable sparse mapping, dense mapping and object recognition across half a dozen applied research teams. Further, he spent 2 years on the Planner Evaluation team at Waymo doing analysis and verification of the interaction between Autonomous Vehicles and Vulnerable Road Users.

Ramiro holds a PhD in signal analysis and system optimization; his work focuses on system modeling and machine learning. During his PhD he focused on medical image analysis, where he created and implemented machine learning models for 3D image segmentation, classification and generation using convolutional neural networks, variational autoencoders, generative adversarial models and recurrent networks. He is currently also an assistant teacher and member of the robotics and artificial intelligence group at Universidad Tecnológica Nacional (Argentina).

Bowen was previously an engineering manager at Apple AI/ML. He led the team that bootstrapped Apple’s first LLM inferencing platform, and modernized and scaled infrastructure for training, serving, and data processing.